Today I’m going to talk about some performance considerations for web applications and the advantages of using a memory cache.

Introduction

As noted in my previous memcached post, most applications have some data that can be kept in a local cache so you don’t have to query it every time a user enters your site, such as the exchange rates in the example from that post.

For the exchange rates example, you could store the values locally in several ways: a temporary file in the local file system, a database, a memory cache, or whatever comes to mind. Either way, you don’t need to query the external service again for the rest of the day.

Sometimes, if your application already uses a running DB, you may want to reuse it to store the temporary data so you don’t need to install any additional software such as memcached. As a developer, it can be hard to convince the sysadmin to install new software in the environment.

In this post I’ll compare the performance of a local DB used as a cache (PostgreSQL) with that of a memory cache (Memcached). You may use these comparisons as an argument for moving to a memory cache (I mention memcached, but there are others; I named some in my previous post, and you can find more using your preferred search engine 😉 ).

Test Environment

For the test environment I installed a local PostgreSQL server and a Memcached server; both were running simultaneously during the tests.

For every test I inserted a total of 10000 keys and then read them sequentially. After that, I compared the inserts/second and reads/second and made a graph for each test case. The variables considered were the length of the key (10 to 250 characters), the size of the value object (10kB to 1MB) and the number of threads (1 to 40). In each graph, the horizontal axis represents the variable and the vertical axis shows the insertions or reads per second, depending on the case.
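The measuring loop described above can be sketched roughly as follows. This is not the original test code: `SimpleCache`, `MapCache` and `ThroughputTest` are hypothetical names, and the in-memory map only stands in for the real memcached or PostgreSQL backed implementations so the sketch runs without any server.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical minimal cache contract: only put and get.
interface SimpleCache {
    void put(String key, byte[] value);
    byte[] get(String key);
}

// Stand-in backend (assumption): a real test would talk to memcached or the DB here.
final class MapCache implements SimpleCache {
    private final Map<String, byte[]> store = new ConcurrentHashMap<>();
    public void put(String key, byte[] value) { store.put(key, value); }
    public byte[] get(String key) { return store.get(key); }
}

final class ThroughputTest {
    // Builds a key of exactly keyLength characters that is unique for each index.
    static String makeKey(int index, int keyLength) {
        String suffix = Integer.toString(index);
        if (suffix.length() > keyLength) {
            throw new IllegalArgumentException("keyLength too small for index " + index);
        }
        StringBuilder sb = new StringBuilder(keyLength);
        for (int i = suffix.length(); i < keyLength; i++) {
            sb.append('k');
        }
        return sb.append(suffix).toString();
    }

    static double opsPerSecond(int operations, long elapsedNanos) {
        return operations / (Math.max(elapsedNanos, 1L) / 1_000_000_000.0);
    }

    // Inserts `count` values, then reads them back sequentially.
    // Returns {inserts per second, reads per second}.
    static double[] run(SimpleCache cache, int count, int keyLength, int valueSize) {
        // Keys are built up front so key generation is not part of the timing.
        String[] keys = new String[count];
        for (int i = 0; i < count; i++) {
            keys[i] = makeKey(i, keyLength);
        }
        byte[] value = new byte[valueSize];

        long start = System.nanoTime();
        for (String key : keys) {
            cache.put(key, value);
        }
        long insertNanos = System.nanoTime() - start;

        start = System.nanoTime();
        for (String key : keys) {
            if (cache.get(key) == null) {
                throw new IllegalStateException("missing key " + key);
            }
        }
        long readNanos = System.nanoTime() - start;

        return new double[] { opsPerSecond(count, insertNanos), opsPerSecond(count, readNanos) };
    }

    public static void main(String[] args) {
        // 10000 keys of 100 characters with 6kB values, as in one of the test cases.
        double[] result = run(new MapCache(), 10_000, 100, 6 * 1024);
        System.out.printf("inserts/s=%.0f reads/s=%.0f%n", result[0], result[1]);
    }
}
```

Swapping `MapCache` for an implementation backed by a real server would give one data point per run; repeating the run while changing a single parameter produces one curve of a graph.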

You can find the source code of the tests here. I didn’t write the code with the intention of sharing it afterwards, so it may be incomplete (the DB cache only implements the put and get methods, not delete or flush) and a bit hard to read if you don’t speak Spanish. If you read it and have questions, just ask in the comments.

Test Results

Variable length of key

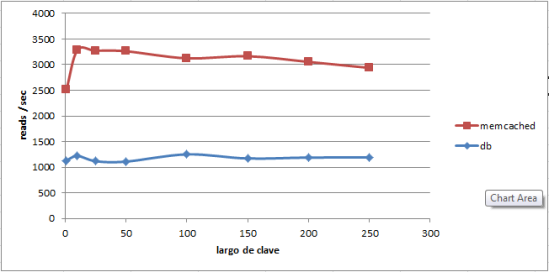

In this test, the variable is the length of the key. The object size is fixed to 6kB. According to the memcached documentation, the key length limit is 250 characters, so I wondered how getting close to this limit affects performance. Here you can see that with keys longer than 200 characters, the number of insertions/sec decreases significantly for memcached (red line).
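Since the test keys run right up to the limit, it can help to check keys before sending them. The following is a minimal sketch, assuming the rules from memcached's protocol documentation (at most 250 bytes, no whitespace or control characters); `MemcachedKeys` is a hypothetical helper, not part of any client library.

```java
import java.nio.charset.StandardCharsets;

// Hypothetical helper that checks a key against memcached's documented key rules.
final class MemcachedKeys {
    static final int MAX_KEY_BYTES = 250;

    static boolean isValid(String key) {
        if (key == null || key.isEmpty()) {
            return false;
        }
        // The limit counts bytes, so non-ASCII characters use it up faster.
        if (key.getBytes(StandardCharsets.UTF_8).length > MAX_KEY_BYTES) {
            return false;
        }
        for (int i = 0; i < key.length(); i++) {
            char c = key.charAt(i);
            if (c <= ' ' || c == 0x7f) {
                return false; // space or control character
            }
        }
        return true;
    }
}
```

Note that the limit is counted in bytes, so a key made of accented characters can be rejected well before it reaches 250 characters.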

The same tests running against the DB (blue line) show an almost constant number of insertions and reads per second, but far fewer than memcached. The peak insertions/second is 14700 for Memcached, whereas for the DB it is 350.

Memcached serves more than 3 times as many reads per second as the DB.

Variable object size

In this case the variable is the object size, from 10kB to 1MB. The key length is fixed to 100 characters.

It is very clear how the performance drops in both cases as the size of the inserted object increases. The biggest size inserted is 1MB, since this is the default item size limit for Memcached.

Variable number of threads

This last case fixes the length of the key to 100 characters and the object size to 6kB. The variable is the number of threads used to read the keys. Each of these threads reads all the 10000 keys previously stored in the cache. In this case, only the read part matters, since the objects are inserted into the cache only once.
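The threaded read test described above could look roughly like this. It is a sketch rather than the original code: `ThreadedReadTest` is a hypothetical name and a plain `Map` stands in for the cache client, so it runs without a server.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.concurrent.atomic.AtomicLong;

final class ThreadedReadTest {
    // Starts `threads` readers; every reader fetches all keys once.
    // Returns the total number of successful reads across all threads.
    static long readAllKeys(Map<String, byte[]> cache, List<String> keys, int threads) {
        ExecutorService pool = Executors.newFixedThreadPool(threads);
        AtomicLong hits = new AtomicLong();
        try {
            List<Future<?>> tasks = new ArrayList<>();
            for (int t = 0; t < threads; t++) {
                tasks.add(pool.submit(() -> {
                    for (String key : keys) {
                        if (cache.get(key) != null) {
                            hits.incrementAndGet();
                        }
                    }
                }));
            }
            for (Future<?> task : tasks) {
                task.get(); // wait for each reader and surface its failure, if any
            }
            return hits.get();
        } catch (InterruptedException | ExecutionException e) {
            throw new IllegalStateException("reader thread failed", e);
        } finally {
            pool.shutdown();
        }
    }

    // Aggregate reads per second over all threads.
    // The timing includes thread start-up, which is acceptable for a rough comparison.
    static double readsPerSecond(Map<String, byte[]> cache, List<String> keys, int threads) {
        long start = System.nanoTime();
        long reads = readAllKeys(cache, keys, threads);
        long elapsed = Math.max(System.nanoTime() - start, 1L);
        return reads / (elapsed / 1_000_000_000.0);
    }
}
```

Calling `readsPerSecond` with 1, 2, 4 and so on up to 40 threads yields the points of one curve; a backend that is already saturated returns roughly the same value no matter how many threads are added.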

Clearly, the reads/second limit for the DB is reached very quickly: with more than 4 threads, the reads/sec stay roughly constant between 1500 and 2000.

For memcached, this limit is not reached and adding more threads increases the number of reads/sec. I didn’t try with more than 40 threads, but this shows that, from a performance point of view, a single memcached server can be shared by 2 or more applications.

Conclusion

As expected, the memory cache performs better than the DB in every case, no matter the variable.

Even though memcached loses performance with big objects or long keys, when used in a normal range (e.g. objects smaller than 500kB) it performs very well. More importantly, a memcached setup scales well and easily: if the performance isn’t good enough, you can add another memcached server on any machine with some spare memory and that’s it, the number of reads and writes per second will increase.
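Adding a server helps because the client spreads the keys across all the servers it knows about. Here is a minimal sketch of that idea, assuming a simple hash-modulo rule; `ServerPicker` and the host names are hypothetical, and real client libraries often use consistent hashing instead so that fewer keys move when a server is added.

```java
import java.util.Arrays;
import java.util.List;

// Hypothetical illustration of client-side key distribution over several cache servers.
final class ServerPicker {
    private final List<String> servers;

    ServerPicker(List<String> servers) {
        if (servers.isEmpty()) {
            throw new IllegalArgumentException("need at least one server");
        }
        this.servers = servers;
    }

    // The same key always maps to the same server as long as the list is unchanged.
    String serverFor(String key) {
        int index = Math.floorMod(key.hashCode(), servers.size());
        return servers.get(index);
    }

    public static void main(String[] args) {
        // Host names are made up; 11211 is memcached's default port.
        ServerPicker picker = new ServerPicker(Arrays.asList("cache1:11211", "cache2:11211"));
        System.out.println(picker.serverFor("exchange-rate-EUR-USD"));
    }
}
```

With this simple rule most keys map to a different server once the list changes, so the cache is partly cold right after a server is added.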

Please note that this comparison is not a formal benchmark between these caches, because the tests add some overhead. They are written in Java, and the Java VM has some time and memory overhead. A more formal benchmark would use a small-footprint language for the client, for example C or Lua. I used Java because my main goal wasn’t to get the exact number of inserts a cache can handle, but an approximate improvement percentage when using a memory cache instead of a DB.

I hope you enjoyed reading the post as much as I enjoyed writing it!

Please let me know if you have any comments or suggestions. I would also like to hear about your experiences with other memory caches; I am open to alternatives 😉 .